What is a Neural Network?

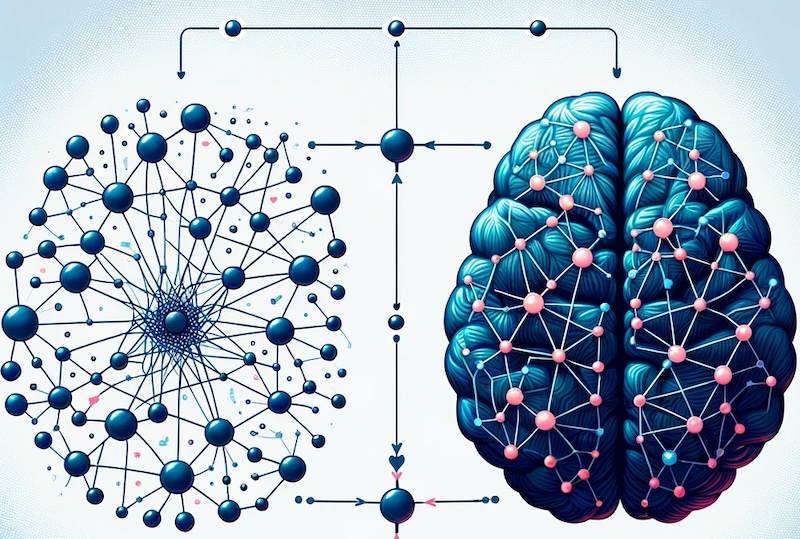

Important: This page is here to give readers a starting point only. Links to more authoritative material are at the end of the page.

[1] Neural Networks have parallels to the human brain. Our brain uses a vast network of neurons to process information. Neural networks use interconnected nodes in a similar way to perform complex computations. Nodes are arranged in layers, and the model's parameters, or weights (the strengths of the connections between nodes), are what give rise to its apparently intelligent actions. To give an idea of size, a powerful model may have hundreds of billions of such connections. Even so, the human brain remains far more complex!
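To give a feel for how quickly the number of connections grows, here is a minimal sketch that counts the parameters of a small fully connected network. The function name `count_parameters` and the layer sizes are hypothetical, chosen only for illustration; real models use more varied layer types.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of nodes in each layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between adjacent layers
        total += n_out         # one bias per node in the receiving layer
    return total


# Hypothetical toy network: 4 inputs, two hidden layers of 8 nodes, 3 outputs.
toy_total = count_parameters([4, 8, 8, 3])
print(toy_total)  # 139
```

Even this toy network has 139 parameters; widening the layers multiplies the count, which is how large models reach billions.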

[2] Layers of a Neural Network. The input layer receives data, hidden layers process it, and the output layer delivers the result. Each layer detects different features of the data, contributing to the network's decision-making process. A language model, for example, starts by picking up words, sentences and paragraphs and their meaning. Given enough training data, it then begins to show deeper understanding in ways that can be unexpected and impressive. These are usually termed emergent properties. Indeed, the network's ability to integrate learned information in novel and often sophisticated ways has been one of the most important developments in recent times.
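The flow from input layer to hidden layer to output layer can be sketched in a few lines. This is an illustrative example only: the weights below are made-up numbers rather than trained values, and the choice of ReLU and softmax as activation functions is an assumption, not something the text above specifies.

```python
import math


def relu(values):
    # Keep positive signals, zero out negative ones.
    return [max(0.0, v) for v in values]


def softmax(values):
    # Turn raw scores into probabilities that sum to 1.
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    # weights[j][i] is the strength of the connection from input i to node j.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    hidden = relu(dense(inputs, hidden_w, hidden_b))  # hidden layer processes the data
    return softmax(dense(hidden, out_w, out_b))       # output layer delivers the result


# Made-up weights: 2 inputs -> 3 hidden nodes -> 2 outputs.
hidden_w = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_b = [0.0, 0.1, -0.1]
out_w = [[1.0, -1.0, 0.5], [-0.5, 0.3, 0.8]]
out_b = [0.0, 0.0]

probs = forward([1.0, 2.0], hidden_w, hidden_b, out_w, out_b)
print(probs)
```

Training a network amounts to adjusting the numbers in `hidden_w`, `hidden_b`, `out_w` and `out_b` until the outputs match the desired results.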

Something like this (apparently made by brilliant.org, which is worth a look):

Amazing graphical representation of a neural net, never seen anything like it. pic.twitter.com/1xUQHAiq50

— Gabriel Elbling (@gabeElbling) October 26, 2024

[3] Large neural networks.

Convolutional Neural Networks. These are adept at identifying patterns and features in visual data, so they are often used for complex image recognition tasks such as identifying specific objects within an image or scene. An early example, AlexNet (2012), had about 60 million parameters and 8 layers.
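The pattern detection at the heart of a convolutional layer can be illustrated with a small sketch. A kernel (a small grid of weights) slides over the image and responds strongly wherever the pattern it encodes appears. The image and the `vertical_edge` kernel below are hand-written for illustration; in a real network the kernel values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image with no padding.

    As in most deep learning libraries, the kernel is not flipped
    (strictly speaking this is cross-correlation).
    """
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0
            for i in range(k_h):
                for j in range(k_w):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        output.append(row)
    return output


# A 4x4 "image": dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The non-zero middle column of the result marks where the edge sits in the image.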

Recurrent Neural Networks and Long Short-Term Memory Networks. These are often used for speech recognition and natural language processing. Long Short-Term Memory networks are designed to remember long-term dependencies, that is, information from much earlier in a sequence, which is useful in these applications.
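The idea of a network carrying information forward through a sequence can be sketched with a single recurrent unit. This is a plain recurrent step, not a Long Short-Term Memory cell (which adds gates that control what is kept and what is forgotten), and the weights `w_x`, `w_h` and `b` are arbitrary illustrative values.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    # The new hidden state mixes the current input with the previous state.
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.9, b=0.0):
    h = 0.0  # the hidden state starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# Only the first input is non-zero, yet later states still carry a trace of it.
states = run_rnn([1.0, 0.0, 0.0])
print(states)
```

The trace of the first input fades at each step, which hints at why a plain recurrent network struggles with long sequences.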

Transformers. Networks like the GPT (Generative Pre-trained Transformer) series for text generation can have billions, and in the largest cases hundreds of billions, of parameters and have pushed the boundaries of natural language understanding. The Transformer architecture makes use of attention mechanisms that allow it to weigh the importance of different parts of the input data.
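One common form of attention, scaled dot-product attention, can be sketched as follows. Each query is compared with every key, the similarity scores are turned into weights that sum to 1, and the output is the weighted mix of the values. The vectors below are made up for illustration; in a real Transformer the queries, keys and values are computed from the input by learned layers.

```python
import math


def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)  # how much attention each position gets
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up example: one query that points the same way as the first key.
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[4.0, 0.0]]

outputs, weights = attention(queries, keys, values)
print(weights[0])
print(outputs[0])
```

The first position receives the largest weight, so the output is pulled towards the first value vector.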

[4] Training data size. As an example of what training a neural network involves, the training data for the Generative Pre-trained Transformer (GPT) series is vast and varied, underscoring the immense scale at which these models learn. It includes websites and other text sources such as scientific papers, fiction, non-fiction and news articles, encompassing a wide array of subjects, languages, and writing styles. The sheer volume can be measured in many billions of words, and processing it requires huge computing resources. This breadth exposes the models to a very wide range of topics, allowing them to generate responses that draw on knowledge from many domains.

[5] Trained but not perfect. A neural net does not immediately become useful to end users simply because it has seen a lot of data. Issues around this (biases in training data, ethical considerations, defamation or libel by an AI model, etc.) are dealt with in the AI Ethics area of this site.

[6] Want to know more? The links below provide enough information to turn you into an expert in Deep Learning and Neural Nets. Please do let us know (email at bottom of home page) if you know of a further resource we should add, or if any of the links below have problems of any kind.