What is the 'Diffusion Method' of producing images?

How do diffusion based AI art tools create a detailed scene from a prompt such as "squirrel fishing by a river"?

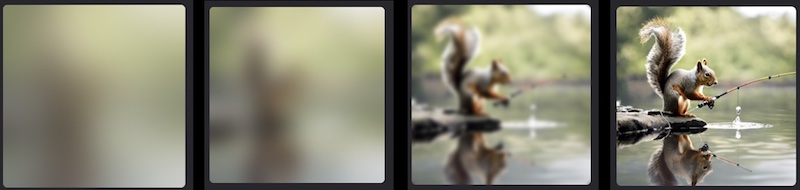

[1] Random noise. The diffusion model starts by generating a digital 'canvas' filled with pure random noise — similar to static on an old television screen. This noise comprises randomly distributed pixel values with no inherent structure or meaning. A 'seed' value, such as 934743, is used to introduce a specific pattern of randomness, ensuring that each seed generates a unique (repeatable) noise image.

[2] The Diffusion Process. The diffusion model then iteratively transforms this initial noise into a coherent image through a series of denoising steps. At each step, the model tries to remove some of the noise and introduce structure based on the input prompt ("squirrel fishing by a river"). This is achieved by a deep neural network that has been trained on a vast dataset of images and their corresponding text descriptions.

The neural network analyzes the current state of the image and the input prompt, and predicts the next step in the denoising process. This prediction is based on the patterns and relationships it has learned from the training data. The model then applies this prediction to the image, gradually refining and clarifying the relevant shapes, textures, and colors that align with the desired prompt.

The following set of images shows the progression from noise to image.

The latest AI art tools seem to have genuine artistic ability beyond anything that was generally expected a few years ago. In the same way that humans sometimes imagine faces and other shapes in clouds and vegetables etc, it looks at the canvas and it works to develop the dark and light areas to produce the needed shapes and colours. It then goes far far beyond that.

It's important to note that the diffusion process is highly complex and involves advanced mathematical concepts from fields like probability theory and differential equations. However, at its core, it leverages the power of deep learning and neural networks to transform random noise into visually coherent and semantically meaningful images based on textual prompts.

[3] The Final Version. The transformation is complete and there is now a detailed and vibrant scene of a squirrel fishing by a river. When the tool produces multiple different images it is using multiple seeds (934743, 656232, 545488 etc). If instead the user wants to repeat the same basic image then the seed is reused.

[4] Other Methods. The diffusion method is one of several techniques used for generating images with deep learning models. It has gained significant popularity recently but there are other approaches including Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs).

[5] Want to know more? The links below provide enough information to turn you into an expert in Diffusion Models. Please do let us know (email at bottom of home page) if you know of a further resource we should add, or if any of the links below have problems of any kind.